An Holistic discovery of Index

or in a less pedendic version, what's indexing ?

A few months ago, I had one of the more interesting interviews in my short career. It was for a backend position and consisted mostly of open-ended questions. One question, in particular, stuck with me and will be the focus of this article: “What’s indexing?”

A few months ago, I had one of the more interesting interviews in my short career. It was for a backend position and consisted mostly of open-ended questions. One question, in particular, stuck with me and will be the focus of this article: “What’s indexing?”

This question came up during a discussion about how to optimize an SQL query, where I proposed creating an index—something I had rarely done before. My response to the question revealed my limited understanding of the subject. I mumbled words like B+ tree and hashmap, hoping to confuse my interviewer into believing I knew more than I actually did. It didn’t work.

The thing is, I could have said that an index in a database is like an index in a book, but in a technical interview, this seems like an overly simplistic answer. If not for the interviewer, at least for me. So, what was I missing to paint a clear picture of what an index really is? I had some insight, but I still lacked a more comprehensive understanding.

What’s a database again ?

Let’s start from the beginning: what’s a database? Well, it’s a place to store data. The simplest implementation would be an array where we store the information we want to keep, and for good mersure we could write it to the file system for persistence1.

As you might guess, this isn’t the approach used by modern DBMS (Database Management Systems), which do much more than just store data. They offer speed and reliability, neither of which is achievable with a simple list.

The first (easier?) challenge is speed. As you might know, searching in an unsorted list is linear O(n)O(n) and therefore quite slow. A simple solution is to use a sorted list, which improves query speed to O(log(n))O(log(n)) if we use something like a binary search. However, maintaining order can be troublesome. How would you insert a new value? Leave space? Copy the array? Fortunately, developers are familiar with this type of issue and solve it using data structures.

Continuing with something familiar, a great example of a parallel to a developer’s daily life is the presence of a HashMap in the database realm, which I think is one of the most well-known and popular data structures.

In a database, the hashtable counterpart is called an SSTable (Sorted Strings Table). It’s a simple data structure, similar to a log, that provides a persistent, ordered, immutable map. It is used in a number of databases, particularly NoSQL databases.

In the figure, you can see how this SSTable is represented, with the key “Pierre” and its corresponding value.

- When we have a new value, we append it.

- When compacting to reduce wasted space, we keep only the most recent value.

- In case of deletion, we use a special value called a tombstone.

This is an elegant and practical approach, but we still face the hard part: sorting.

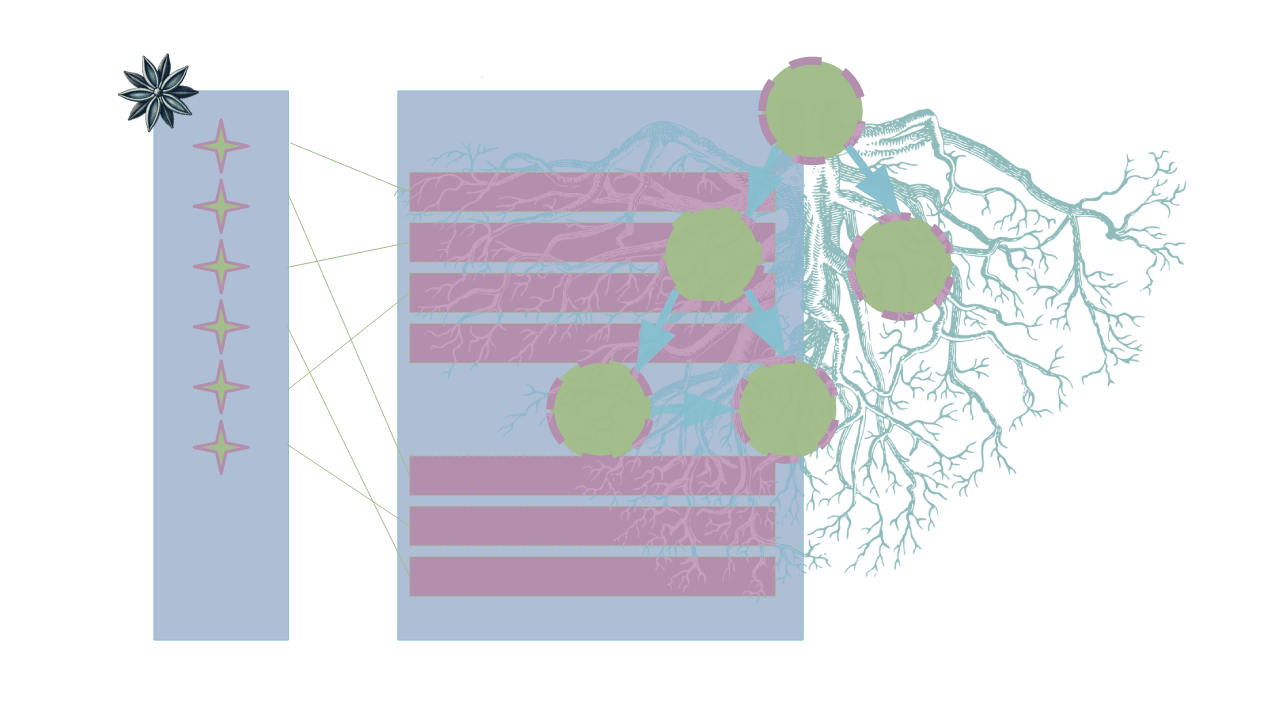

So, how do we sort our SSTable? We transofrm it into an ordered tree, like an AVL tree(a self-balancing tree). The important thing to note with balanced trees is that it ensure an insertion time of O(log(n)), and more importantly for our SStable, the transformation process is O(n). Traversing the tree will generate our precious SSTable. This method, which combines a tree structure (in memory) with SSTables (on disk), is called an LSM Tree. But where is the tree in the term “LSM Tree”? You might ask, as I did, the short answer is it’not, the name is as far as I found very misleading. However, if you’re interested in trees, B+ trees might suit you better, as they are the standard in traditional SQL databases like PostgreSQL.

If I trust the Wikipedia page2: the B stands for “Boeing, balanced, between, broad, bushy, or Bayer”. To the modern reader, I reassure you that even if the B originally stood for Boeing, it wouldn’t be here, because modern databases often crash.

But I digress. Let’s go with the more representative abreviation,“balanced,” because that’s what the tree aims to maintain. A B+ tree ensures balance by controlling the number of keys a node can hold, which is called the degree. When a node exceeds this limit, it splits, redistributing keys to maintain balance across the tree. The algorithm is complex, with numerous edge cases and constraints to maintain. The most important constraint is that the leaf nodes, which are at the bottom of the tree, must always be at the same level. If you’d like to experiment with a B+ tree, here’s an interactive page. The “+” in B+ tree is simpler to explain: it indicates that the leaf nodes are connected.

Yes, we do talk a lot about data structures in this article, but they are at the heart of this indexing conversation. This was essentially what was missing from my initial explanation, so let me help my not-so-younger self with a proposal:

“indexes in databases are abstractions built on top of data structures that behave like a book index.”

I like this explanation as it doesn’t hide the complexity but still provides what I feel is a holistic response.

Let’s continue to understand why I feel having a holistic view of indexing is important. As you may have heard, indexes come at a cost, and a rule of thumb I’ve heard is: “You shouldn’t add an index for a table with fewer than 1,000 rows.” But why? And more importantly, how much indexing is done by default?

The reason indexing is costly becomes obvious when we keep the B+ tree in mind. Double indexing implies double writes to the data structure, and this doubles the workload. Additionally, if you’ve played with the interactive page I mentioned earlier, you might have noticed how much rearrangement occurs when a tree is modified (see figure 1.2). A single insert can have a significant impact, and the same applies to deletions. This explains why databases sometimes use tombstones, which indicate that a record is deleted (the is_deleted collumn), serving both as a backup precaution and an optimization. In contrast, an LSM tree is more efficient for inserts, as they cost little more than a simple append.

The second question is more subtle: what indexing is done by default? In the case of PostgreSQL, anything that is unique, such as primary keys and columns with a unique constraint, is automatically indexed. Primary keys are particularly interesting because they’re often UUIDs, which are random. This randomness has downsides.

Let me end this article, on this UUID note, with a final example of why understanding indexing is crucial. During an internship, one initiative was to switch from UUIDs to ULIDs, similar to what Shopify did when they increased their insert speed by 50%3 just by switching to ULIDs. The reason is clear if we consider two things:

- ULIDs are ordered by time. The first 48 bits are a timestamp, which means that in our tree, the distance between two nodes will often depend on how close in time they were created.

- PostgreSQL has a caching mechanism called the shared buffer, where it stores recently used pages for quicker access.

With UUIDs, which are completely random, the caching mechanism is rendered almost useless because there’s little chance that another row on the same page will be needed. In contrast, with ULIDs, if for instance you need to change the status of all users from yesterday, you’ll likely get a cache hit, with all the benefits you might imagine. And there are a lot more interesting things with real applications involving indexes and how they are built. For instance, with the latest version of PostgreSQL (17), great improvements to the IN operator were achieved by leveraging the fact that B+ tree leaves are connected.

This article might not introduce many new concepts to seasoned readers, but I see value in connecting ideas and linking theory to practical examples. As emphasized in the introduction, understanding is not just about concepts but also about their arrangement, which is not unlike a data structure.

I now wonder about the downsides of ORMs—or, more precisely, about adding abstraction to what is already a beautiful and efficient system. Some of my colleagues believe that SQL alone isn’t enough and that only using it would slow down development. But adding annotations hides so much from view, and even a simple database has already multiple layers of abstraction—from the query planner to the file system to the kernel. Can we honestly say we understand all of this, plus the layers added by an ORM? And more importantly, do we understand how they are arranged?